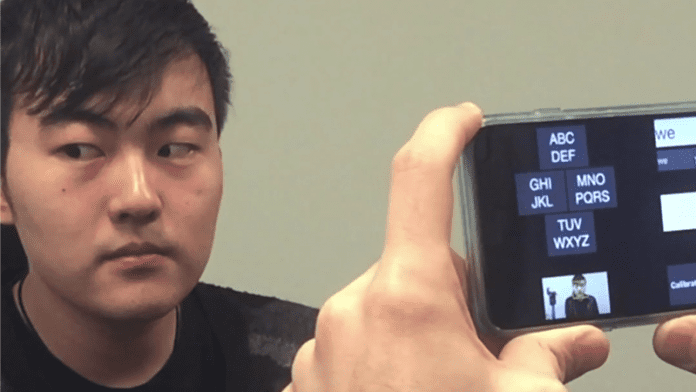

Researchers working with Microsoft have developed GazeSpeak, a smartphone app that could help people with ALS speak the language of the eyes. It interprets eye gestures in real time and predicts what the person is trying to say.

The idea is simple. The app divides the alphabet into a grid of four boxes, which the person with ALS can see on a sticker on the back of the device. The person looks toward the box containing the letter they want, and the app registers whether they looked up, right, left or down. Xiaoyi Zhang, an intern at Microsoft Research, explained: “For example, to say the word ‘task’ they first look down to select the group containing ‘t’, then up to select the group containing ‘a’, and so on.” GazeSpeak then uses AI and predictive texting to guess the word they were going for. Meredith Morris at Microsoft Research in Redmond, Washington, said: “We’re using computer vision to recognize the eye gestures, and AI to do the word prediction.”

The existing low-tech way of communicating with people who have this disease is to write letters on a board and track the person's eye movements. GazeSpeak is faster: completing a sentence takes an average of 78 seconds, compared with 123 seconds using the board method.

According to reports, Microsoft plans to launch the app for iOS devices before the Conference on Human Factors in Computing Systems, which will be held in May 2017, and the source code of the app will be freely available.

So, what do you think about this? Share your views in the comment box below!
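For readers curious how the group-and-predict idea described above could work, here is a minimal Python sketch. It is not GazeSpeak's actual code. The letter grouping is an assumption (the article only confirms that 't' sits in the "down" group and 'a' in the "up" group), and the word counts are made-up numbers standing in for a real predictive-text model.

```python
# Hypothetical letter groups. Only 't' -> "down" and 'a' -> "up" come from
# the article; the rest of the layout is assumed for illustration.
GROUPS = {
    "up": "abcdef",
    "left": "ghijklm",
    "right": "nopqrs",
    "down": "tuvwxyz",
}

# Reverse lookup: which direction selects each letter.
LETTER_TO_DIRECTION = {
    letter: direction
    for direction, letters in GROUPS.items()
    for letter in letters
}

# Made-up word frequencies standing in for a real language model.
WORD_COUNTS = {"task": 50, "tank": 20, "tall": 30, "walk": 40}


def encode(word):
    """Return the sequence of eye gestures that would spell the word."""
    return [LETTER_TO_DIRECTION[ch] for ch in word.lower()]


def predict(gestures, word_counts=WORD_COUNTS):
    """Return words matching the gesture sequence, most frequent first."""
    matches = [w for w in word_counts if encode(w) == list(gestures)]
    return sorted(matches, key=lambda w: word_counts[w], reverse=True)


if __name__ == "__main__":
    gestures = ["down", "up", "right", "left"]
    print(predict(gestures))  # ['task', 'tank']
```

Because each gesture selects a whole group of letters rather than a single letter, several words can share the same gesture sequence ("task" and "tank" in this toy vocabulary). That ambiguity is why a word-prediction step is needed to rank the candidates.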
